9. Loss Functions, Backpropagation, and Optimizers

Loss functions

- Measure the gap between the actual output and the target

- Give us a basis for improving the output, by way of backpropagation

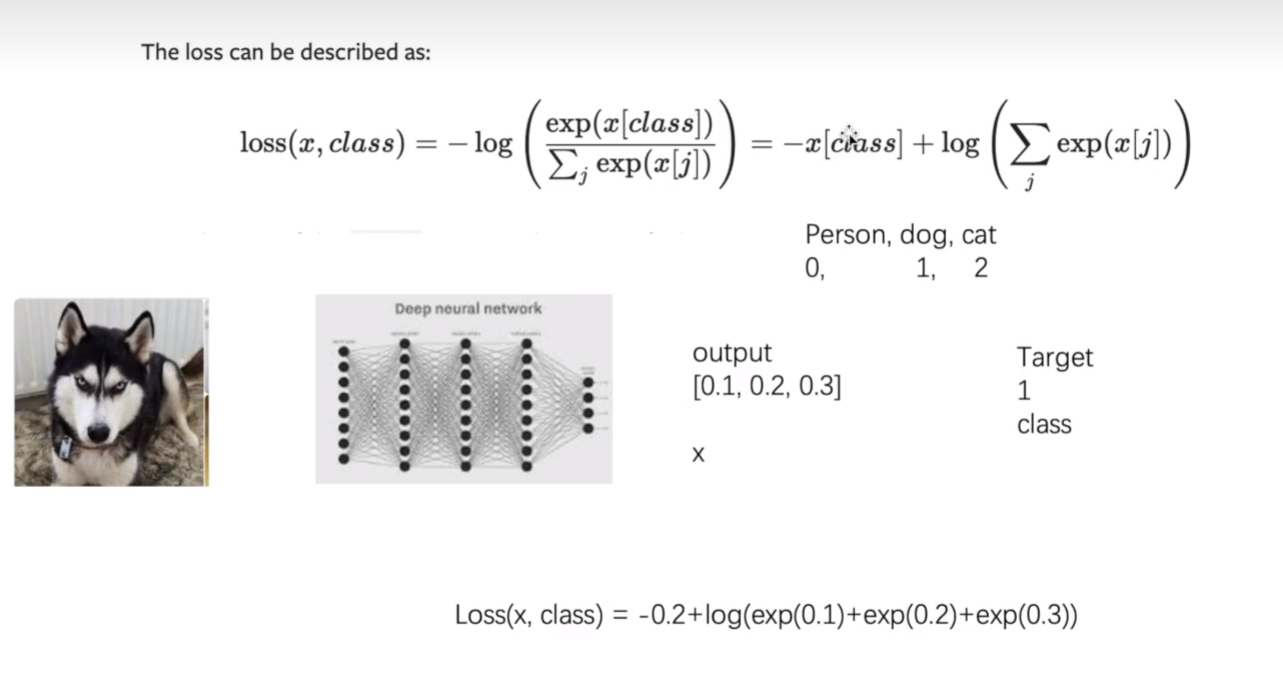

CROSSENTROPYLOSS (cross-entropy)

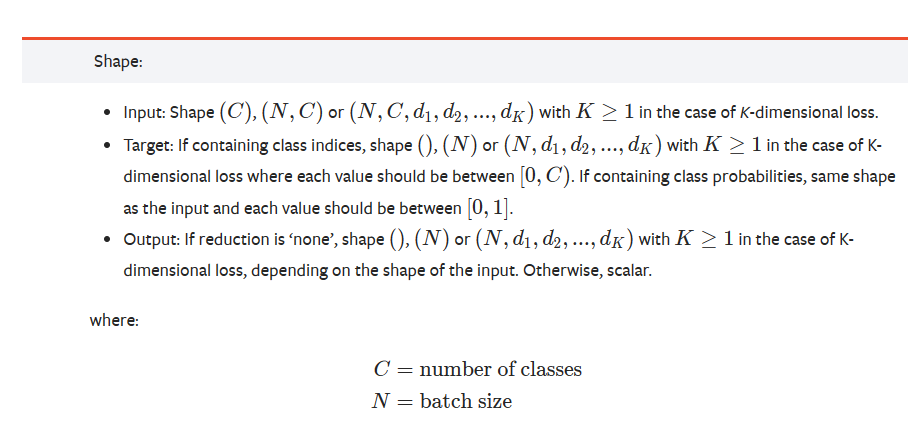

CLASS torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean', label_smoothing=0.0)

- weight (Tensor, optional) – a manual rescaling weight given to each class. If given, has to be a Tensor of size C

- size_average (bool, optional) – Deprecated (see reduction). By default, the losses are averaged over each loss element in the batch. Note that for some losses, there are multiple elements per sample. If the field size_average is set to False, the losses are instead summed for each minibatch. Ignored when reduce is False. Default: True

- ignore_index (int, optional) – Specifies a target value that is ignored and does not contribute to the input gradient. When size_average is True, the loss is averaged over non-ignored targets. Note that ignore_index is only applicable when the target contains class indices.

- reduce (bool, optional) – Deprecated (see reduction). By default, the losses are averaged or summed over observations for each minibatch depending on size_average. When reduce is False, returns a loss per batch element instead and ignores size_average. Default: True

- reduction (str, optional) – Specifies the reduction to apply to the output: ‘none’ | ‘mean’ | ‘sum’. ‘none’: no reduction will be applied, ‘mean’: the weighted mean of the output is taken, ‘sum’: the output will be summed. Note: size_average and reduce are in the process of being deprecated, and in the meantime, specifying either of those two args will override reduction. Default: ‘mean’

- label_smoothing (float, optional) – A float in [0.0, 1.0]. Specifies the amount of smoothing when computing the loss, where 0.0 means no smoothing. The targets become a mixture of the original ground truth and a uniform distribution as described in Rethinking the Inception Architecture for Computer Vision. Default: 0.0.
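To make `reduction` and `ignore_index` concrete, here is a minimal pure-Python sketch of what cross-entropy computes for class-index targets. It assumes no `weight` and no `label_smoothing`; the helper name `cross_entropy` and the sample scores are illustrative and not part of PyTorch. Per sample, the loss is -x[class] + log(sum_j exp(x[j])).

```python
import math

def cross_entropy(logits, targets, reduction="mean", ignore_index=-100):
    """Hypothetical helper: cross-entropy for class-index targets.

    logits:  list of rows, one row of C raw scores per sample
    targets: list of class indices, one per sample
    Assumes no class weights and no label smoothing.
    """
    losses = []
    for row, cls in zip(logits, targets):
        if cls == ignore_index:
            losses.append(0.0)  # ignored samples contribute nothing
            continue
        # log-sum-exp, shifted by the row maximum for numerical stability
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        losses.append(log_sum_exp - row[cls])
    if reduction == "none":
        return losses
    if reduction == "sum":
        return sum(losses)
    if reduction == "mean":
        # the mean is taken over non-ignored targets only
        kept = sum(1 for cls in targets if cls != ignore_index)
        return sum(losses) / kept if kept else float("nan")
    raise ValueError(f"unknown reduction: {reduction}")

# Illustrative data: two samples, three classes
logits = [[0.1, 0.2, 0.3], [2.0, 0.5, 0.1]]
targets = [1, -100]  # the second sample is ignored
per_sample = cross_entropy(logits, targets, reduction="none")
mean_loss = cross_entropy(logits, targets, reduction="mean")
print(per_sample, mean_loss)
```

Because the second target equals `ignore_index`, the mean is taken over the first sample alone, so `mean_loss` equals `per_sample[0]`.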

MSELOSS (mean squared error)

torch.nn.MSELoss(size_average=None, reduce=None, reduction='mean')

- size_average (bool, optional) – Deprecated (see reduction). By default, the losses are averaged over each loss element in the batch. Note that for some losses, there are multiple elements per sample. If the field size_average is set to False, the losses are instead summed for each minibatch. Ignored when reduce is False. Default: True

- reduce (bool, optional) – Deprecated (see reduction). By default, the losses are averaged or summed over observations for each minibatch depending on size_average. When reduce is False, returns a loss per batch element instead and ignores size_average. Default: True

- reduction (str, optional) – Specifies the reduction to apply to the output: ‘none’ | ‘mean’ | ‘sum’. ‘none’: no reduction will be applied, ‘mean’: the sum of the output will be divided by the number of elements in the output, ‘sum’: the output will be summed. Note: size_average and reduce are in the process of being deprecated, and in the meantime, specifying either of those two args will override reduction. Default: ‘mean’
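The three `reduction` modes are easy to check by hand. The sketch below is pure Python rather than PyTorch, and the helper name `mse_loss` is hypothetical; the values are the same input and target vectors used in this post's `loss.py`.

```python
def mse_loss(inputs, targets, reduction="mean"):
    """Hypothetical helper: squared error with the three reduction modes."""
    squared = [(x - y) ** 2 for x, y in zip(inputs, targets)]
    if reduction == "none":
        return squared                      # one value per element
    if reduction == "sum":
        return sum(squared)                 # total squared error
    if reduction == "mean":
        return sum(squared) / len(squared)  # sum divided by element count
    raise ValueError(f"unknown reduction: {reduction}")

inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]
none_result = mse_loss(inputs, targets, "none")  # [0.0, 0.0, 4.0]
sum_result = mse_loss(inputs, targets, "sum")    # 4.0
mean_result = mse_loss(inputs, targets, "mean")  # 4.0 / 3
print(none_result, sum_result, mean_result)
```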

#!/usr/bin/env python
# -*- coding: UTF-8 -*-
"""
@Project :Pytorch_learn
@File :loss.py
@IDE :PyCharm
@Author :咋
@Date :2023/7/5 18:33
"""
import torch
from torch.nn import L1Loss, MSELoss, CrossEntropyLoss

input = torch.Tensor([1, 2, 3])
target = torch.Tensor([1, 2, 5])

# L1Loss: mean absolute error by default; pass reduction="sum" to sum instead
loss = L1Loss()
output = loss(input, target)  # (0 + 0 + 2) / 3

# MSELoss: squared-error loss, summed here
loss_msl = MSELoss(reduction="sum")
output = loss_msl(input, target)  # 0 + 0 + 4

# CrossEntropyLoss: call the instance, not the class.
# It expects raw scores of shape (N, C) and class indices of shape (N,);
# the class index 2 is an illustrative choice, not from the original post.
crossentropyloss = CrossEntropyLoss()
output = crossentropyloss(input.reshape(1, 3), torch.tensor([2]))
print(output)

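Backpropagation

Its main job is to compute the gradients, so that the optimizer described below can carry out the next parameter update.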

loss.backward()
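As a small illustration of what `backward()` leaves behind, the sketch below uses a single parameter and checks the gradient against the hand-derived value. The numbers are illustrative and do not come from the original post.

```python
import torch

# Illustrative values, not from the original post
w = torch.tensor(2.0, requires_grad=True)  # a single trainable parameter
x = torch.tensor(3.0)
target = torch.tensor(5.0)

loss = (w * x - target) ** 2  # squared error of one prediction

grad_before = w.grad          # None: no gradient has been computed yet
loss.backward()               # fills w.grad with d(loss)/dw
grad_after = w.grad.item()

# By hand: d/dw (w*x - target)^2 = 2 * (w*x - target) * x = 2 * 1 * 3
print(grad_before, grad_after)
```

The gradient is stored on the parameter itself (`w.grad`), which is where the optimizer reads it from.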


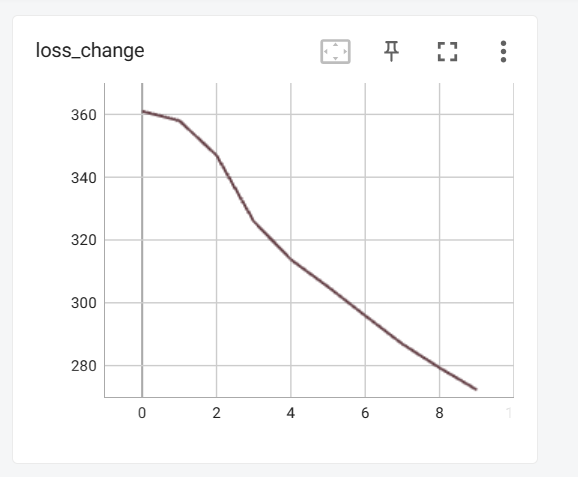

Optimizers

There are many optimizers; at heart, each one is simply an algorithm for updating parameters.
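To make "an algorithm for updating parameters" concrete: plain SGD, without momentum or weight decay, moves each parameter against its gradient, p ← p − lr · grad. The sketch below is pure Python; the helper `sgd_step` and the toy loss (p − 3)² are assumptions chosen for illustration, not taken from the post.

```python
def sgd_step(params, grads, lr):
    """Hypothetical helper: one plain SGD update (no momentum, no weight decay)."""
    return [p - lr * g for p, g in zip(params, grads)]

# Minimize the illustrative loss (p - 3)^2, whose gradient is 2 * (p - 3)
params = [0.0]
history = []
for _ in range(50):
    grads = [2 * (p - 3.0) for p in params]
    params = sgd_step(params, grads, lr=0.1)
    history.append((params[0] - 3.0) ** 2)  # loss after the update

print(params[0], history[0], history[-1])
```

Each update shrinks the distance to the minimum by a constant factor here, so the recorded loss falls steadily. Other optimizers change how the step is computed from the gradient, not the overall idea.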

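A training step comes down to three calls:

- optim.zero_grad() # reset all gradients to zero

- loss_result.backward() # compute the gradients

- optim.step() # update the parameters

The full script below uses TensorBoard to visualize the loss.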
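The three calls can be tried in isolation on a one-parameter model before reading the full script. This is a minimal sketch under assumed toy data (fitting w so that w * x matches 2 * x); the second half shows why `zero_grad()` is needed, since `backward()` adds to any gradient already stored.

```python
import torch

# Illustrative one-parameter model: fit w so that w * x matches y = 2 * x
w = torch.tensor([0.0], requires_grad=True)
optim = torch.optim.SGD([w], lr=0.1)
x = torch.tensor([1.0, 2.0, 3.0])
y = 2.0 * x

losses = []
for step in range(20):
    loss_result = ((w * x - y) ** 2).mean()
    optim.zero_grad()        # 1. clear gradients left over from the previous step
    loss_result.backward()   # 2. compute d(loss)/dw into w.grad
    optim.step()             # 3. update w from w.grad
    losses.append(loss_result.item())

# Without zero_grad(), backward() accumulates into the existing gradient
v = torch.tensor([1.0], requires_grad=True)
(v * 3).sum().backward()
first = v.grad.item()        # gradient of 3 * v is 3
(v * 3).sum().backward()
accumulated = v.grad.item()  # second call adds another 3

print(w.item(), losses[0], losses[-1], first, accumulated)
```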

#!/usr/bin/env python
# -*- coding: UTF-8 -*-
"""
@Project :Pytorch_learn
@File :optim.py
@IDE :PyCharm
@Author :咋
@Date :2023/7/13 10:20
"""
import torch
import torchvision
from torch.nn import Sequential, Conv2d, MaxPool2d, Linear, Flatten, Module
from torch.utils.data import DataLoader
from tensorboardX import SummaryWriter

dataset = torchvision.datasets.CIFAR10("CIFAR10", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset=dataset, batch_size=64)

# Define the network
class MyModule(Module):
    def __init__(self):
        super(MyModule, self).__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10),
        )

    def forward(self, x):
        x = self.model(x)
        return x

model = MyModule()
# Define the loss function
loss = torch.nn.CrossEntropyLoss()
# Define how the parameters are updated
optim = torch.optim.SGD(model.parameters(), lr=0.01)
write = SummaryWriter("log_5")

for epoch in range(10):
    run_loss = 0
    for i, data in enumerate(dataloader):
        img, label = data
        result = model(img)
        # result = result.reshape((64,10))
        loss_result = loss(result, label)
        optim.zero_grad()  # reset all gradients to zero
        loss_result.backward()
        optim.step()
        # Adding the tensor itself keeps grad_fn attached, as the printed output shows;
        # loss_result.item() would accumulate a plain float instead.
        run_loss += loss_result
    print(run_loss)
    write.add_scalar("loss change", run_loss, epoch)

print("OK!")
write.close()

Files already downloaded and verified

tensor(359.8933, grad_fn=<AddBackward0>)

tensor(353.3619, grad_fn=<AddBackward0>)

tensor(329.9563, grad_fn=<AddBackward0>)

tensor(313.8071, grad_fn=<AddBackward0>)

tensor(304.5399, grad_fn=<AddBackward0>)

tensor(295.0195, grad_fn=<AddBackward0>)

tensor(287.4640, grad_fn=<AddBackward0>)

tensor(280.7477, grad_fn=<AddBackward0>)

tensor(274.6153, grad_fn=<AddBackward0>)

tensor(269.1077, grad_fn=<AddBackward0>)

OK!
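Each printed total carries `grad_fn=<AddBackward0>` because `run_loss` accumulates loss tensors that are still attached to the autograd graph. A common alternative is to accumulate `loss_result.item()`, which is a plain Python float. The sketch below contrasts the two on stand-in values; the three "batches" and their losses are illustrative, not taken from the CIFAR10 run.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)

run_loss_tensor = 0
run_loss_float = 0.0
for scale in (1.0, 2.0, 3.0):             # stand-ins for three batches
    loss_result = (w * scale) ** 2        # illustrative losses: 1, 4, 9
    run_loss_tensor += loss_result        # stays attached to the autograd graph
    run_loss_float += loss_result.item()  # detached Python float

print(run_loss_tensor)
print(run_loss_float)
```

The float version is usually the better fit for logging, since it does not keep each batch's graph alive for the whole epoch.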

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit 咋's personal blog when reposting.